Workshop 4: Build OpenAI Virtual Assistant

OpenAI Assistants API Beta https://platform.openai.com/docs/assistants/overview

The Assistants API allows you to build AI assistants within your own applications. An Assistant has instructions and can leverage models, tools, and knowledge to respond to user queries. The Assistants API currently supports three types of tools: Code Interpreter, Retrieval, and Function calling. In the future, we plan to release more OpenAI-built tools, and allow you to provide your own tools on our platform.

You can explore the capabilities of the Assistants API using the Assistants playground or by building a step-by-step integration outlined in this guide. At a high level, a typical integration of the Assistants API has the following flow:

1. Create an Assistant in the API by defining its custom instructions and picking a model. If helpful, enable tools like Code Interpreter, Retrieval, and Function calling.

2. Create a Thread when a user starts a conversation.

3. Add Messages to the Thread as the user asks questions.

4. Run the Assistant on the Thread to trigger responses. This automatically calls the relevant tools.

The Assistants API is in beta and we are actively working on adding more functionality. Share your feedback in our Developer Forum!

Calls to the Assistants API require that you pass a beta HTTP header. This is handled automatically if you’re using OpenAI’s official Python or Node.js SDKs.

OpenAI-Beta: assistants=v1

This starter guide walks through the key steps to create and run an Assistant that uses Code Interpreter.
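If you call the REST endpoints directly instead of using an SDK, you have to add the beta header yourself. The following is a minimal sketch that uses only the Python standard library. It builds a request to create an Assistant but does not send it. The `https://api.openai.com/v1/assistants` endpoint and the `Authorization: Bearer` scheme follow OpenAI's usual REST conventions. The helper name is hypothetical.

```python
import json
import urllib.request

API_BASE = "https://api.openai.com/v1"


def build_create_assistant_request(api_key: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) a POST /v1/assistants request.

    build_create_assistant_request is a hypothetical helper for illustration.
    """
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
        # The beta header that the official SDKs add automatically
        "OpenAI-Beta": "assistants=v1",
    }
    return urllib.request.Request(
        f"{API_BASE}/assistants",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen(req)` would send it. That step is omitted so the sketch runs offline.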

In addition to the Assistants API, we also provide an Assistants playground (sign in required). The playground is a great way to explore the capabilities of the Assistants API and learn how to build your own Assistant without writing any code.

Step 1: Create an Assistant

An Assistant represents an entity that can be configured to respond to users’ Messages using several parameters, such as:

Instructions: how the Assistant and model should behave or respond

Model: you can specify any GPT-3.5 or GPT-4 model, including fine-tuned models. The Retrieval tool requires either the gpt-3.5-turbo-1106 or the gpt-4-1106-preview model.

Tools: the API supports Code Interpreter and Retrieval, which are built and hosted by OpenAI.

Functions: the API allows you to define custom function signatures, which behave like our function calling feature.
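You can enforce the Retrieval model restriction above before making any API call. This is a minimal sketch. The helper name is hypothetical, and the model set simply copies the list stated above, which may change as the beta evolves. Other tool types are passed through unchanged.

```python
# Models that, per the beta documentation quoted above, support the Retrieval tool
RETRIEVAL_MODELS = {"gpt-3.5-turbo-1106", "gpt-4-1106-preview"}


def assistant_params(name: str, instructions: str, model: str, tools: list[dict]) -> dict:
    """Assemble keyword arguments for client.beta.assistants.create().

    Hypothetical helper: raises ValueError when Retrieval is requested
    with a model outside RETRIEVAL_MODELS.
    """
    tool_types = {tool["type"] for tool in tools}
    if "retrieval" in tool_types and model not in RETRIEVAL_MODELS:
        raise ValueError(
            f"Retrieval requires one of {sorted(RETRIEVAL_MODELS)}, got {model!r}"
        )
    return {"name": name, "instructions": instructions, "model": model, "tools": tools}
```

With the SDK, the result would be unpacked into the create call: `client.beta.assistants.create(**assistant_params(...))`.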

In this example, we're creating an Assistant that is a personal math tutor, with the Code Interpreter tool enabled.


Step 2: Create a Thread

A Thread represents a conversation. We recommend creating one Thread per user as soon as the user initiates the conversation. Pass any user-specific context and files in this Thread by creating Messages.

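You can implement the one-Thread-per-user recommendation as a small lookup. It creates a Thread the first time a user appears and reuses that Thread afterwards. This is a minimal sketch. `get_or_create_thread` is a hypothetical name, and the in-memory dict stands in for whatever persistent store an application would actually use. `client.beta.threads.create()` is the SDK call that creates a Thread.

```python
def get_or_create_thread(client, user_id: str, store: dict) -> str:
    """Return the Thread ID for user_id, creating a Thread on first contact.

    store maps user IDs to Thread IDs; a real application would persist
    this mapping (assumption), not keep it in memory.
    """
    thread_id = store.get(user_id)
    if thread_id is None:
        # First message from this user: start a new conversation Thread
        thread = client.beta.threads.create()
        thread_id = thread.id
        store[user_id] = thread_id
    return thread_id
```

The client is passed in rather than created inside the function. As a result, the function works both with the SDK client and with a stand-in object in tests.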

Threads don’t have a size limit. You can add as many Messages as you want to a Thread. The Assistant ensures that requests to the model fit within the maximum context window, using optimization techniques such as truncation, which we have tested extensively with ChatGPT. When you use the Assistants API, you delegate control over how many input tokens are passed to the model for any given Run. This means you have less control over the cost of running your Assistant in some cases, but you do not have to manage the complexity of the context window yourself.

Step 3: Add a Message to a Thread

A Message contains text and, optionally, any files that you allow the user to upload. Messages need to be added to a specific Thread. Adding images via message objects, as in Chat Completions with GPT-4 with Vision, is not supported today, but we plan to add support for them in the coming months. You can still upload images and have them processed via Retrieval.

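Adding the user's question to the Thread takes one `client.beta.threads.messages.create(...)` call with `role="user"`. This is a minimal wrapper that assumes the v1 beta SDK. `add_user_message` is hypothetical. The optional `file_ids` argument reflects how the v1 beta attached previously uploaded files to a Message, and the wrapper sends it only when you provide it.

```python
def add_user_message(client, thread_id: str, text: str, file_ids=None):
    """Append a user Message to a Thread (hypothetical wrapper).

    file_ids follows the v1 beta, where files were attached to Messages
    by ID; it is omitted from the request when not provided.
    """
    kwargs = {"thread_id": thread_id, "role": "user", "content": text}
    if file_ids:
        kwargs["file_ids"] = list(file_ids)
    return client.beta.threads.messages.create(**kwargs)
```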

Now if you list the Messages in a Thread, you will see that this message has been appended.

Step 4: Run the Assistant

For the Assistant to respond to the user message, you need to create a Run. This makes the Assistant read the Thread and decide whether to call tools (if they are enabled) or simply use the model to best answer the query. As the Run progresses, the Assistant appends Messages to the Thread with role="assistant". The Assistant also automatically decides which previous Messages to include in the model's context window. This affects both pricing and model performance. The current approach has been optimized based on what we learned building ChatGPT and will likely evolve over time.

You can optionally pass new instructions to the Assistant when creating the Run, but note that these instructions override the Assistant's default instructions.

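Creating the Run takes a single `client.beta.threads.runs.create(...)` call that names the Thread and the Assistant. The wrapper below is a hypothetical helper that assumes the v1 beta SDK. It sends `instructions` only when the caller supplies them, because any value passed there replaces the Assistant's default instructions for that Run.

```python
def start_run(client, thread_id: str, assistant_id: str, instructions=None):
    """Create a Run so the Assistant processes the Thread (hypothetical wrapper).

    instructions, when given, overrides the Assistant's default
    instructions, so it is left out of the request unless set.
    """
    kwargs = {"thread_id": thread_id, "assistant_id": assistant_id}
    if instructions is not None:
        kwargs["instructions"] = instructions
    return client.beta.threads.runs.create(**kwargs)
```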

Step 5: Check the Run status

By default, a Run goes into the queued state. You can periodically retrieve the Run to check its status and see whether it has moved to completed.
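You can write the periodic status check described above as a polling loop. This is a minimal sketch. It assumes the SDK's `client.beta.threads.runs.retrieve(thread_id=..., run_id=...)` call and the v1 beta Run statuses (`queued`, `in_progress`, `requires_action`, `cancelling`, `cancelled`, `failed`, `completed`, `expired`). `wait_for_run`, the one-second interval, and the two-minute timeout are illustrative choices.

```python
import time

# Statuses after which polling should stop. "requires_action" means the Run
# is paused waiting for function-call outputs, so the caller has to act.
STOP_STATUSES = {"completed", "failed", "cancelled", "expired", "requires_action"}


def wait_for_run(client, thread_id: str, run_id: str,
                 poll_interval: float = 1.0, timeout: float = 120.0,
                 sleep=time.sleep, clock=time.monotonic):
    """Poll a Run until it reaches a stop status or the timeout elapses.

    Hypothetical helper; sleep and clock are injectable so the loop can be
    exercised without real waiting.
    """
    deadline = clock() + timeout
    while True:
        run = client.beta.threads.runs.retrieve(thread_id=thread_id, run_id=run_id)
        if run.status in STOP_STATUSES:
            return run
        if clock() >= deadline:
            raise TimeoutError(f"Run {run_id} still {run.status!r} after {timeout}s")
        sleep(poll_interval)
```

A typical call is `run = wait_for_run(client, thread.id, run.id)`, followed by a check that `run.status == "completed"` before you read the reply. Stopping on `requires_action` matters once Function calling is enabled, because such a Run does not progress until tool outputs are submitted.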

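For reference, the Math Tutor Assistant used throughout these steps is created with the following call:

```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

# Create the Math Tutor Assistant with the Code Interpreter tool enabled
assistant = client.beta.assistants.create(
    name="Math Tutor",
    instructions="You are a personal math tutor. Write and run code to answer math questions.",
    tools=[{"type": "code_interpreter"}],
    model="gpt-4-1106-preview",
)
```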

Step 6: Display the Assistant's Response

Once the Run completes, you can list the Messages added to the Thread by the Assistant.

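The following sketch lists the Thread and keeps only the Assistant's text. It assumes the v1 beta message shape, in which each Message has a `role` and a list of `content` parts, and text parts carry their string in `text.value`. `assistant_replies` is a hypothetical name. `order="asc"` requests the oldest messages first so the replies print in conversation order.

```python
def assistant_replies(client, thread_id: str) -> list[str]:
    """Return the text of the Assistant's Messages in chronological order.

    Hypothetical helper. Non-text parts (for example image files produced
    by Code Interpreter) are skipped in this sketch.
    """
    messages = client.beta.threads.messages.list(thread_id=thread_id, order="asc")
    replies = []
    for message in messages.data:
        if message.role != "assistant":
            continue
        for part in message.content:
            if part.type == "text":
                replies.append(part.text.value)
    return replies
```

This sketch reads only the first page of results. The list endpoint is paginated, but one page is enough for a short exchange like the one below.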

And finally, display them to the user! During this Run, the Assistant added two new Messages to the Thread. Here is an example of what that might look like:

user

I need to solve the equation 3x + 11 = 14. Can you help me?

assistant

Certainly, Jane Doe. To solve the equation 3x + 11 = 14 for x, you'll want to isolate x on one side of the equation. Here's how you can do that:

1. Subtract 11 from both sides of the equation to get 3x = 3.

2. Then, divide both sides by 3 to solve for x.

Let me calculate the value of x for you.

assistant

The solution to the equation 3x + 11 = 14 is x = 1.

You can also retrieve the Run Steps of this Run if you'd like to explore or display the inner workings of the Assistant and its tools.

Retrieval with Open AI Playground

Author: Madhav Arora

https://medium.com/@madhavarora1988/retrieval-with-open-ai-playground-c462dcf1d99a

What is Retrieval?

Retrieval is a tool that gives your Assistant access to uploaded documents, such as PDFs, and uses retrieval-augmented generation (RAG) to fetch information from them. RAG is a popular and cost-effective way to extend the information available to an LLM, whose knowledge is otherwise limited to its training data. Several startups were built around RAG implementations. Now that this feature is integrated into the Assistants API itself, they have almost certainly lost part of their unique selling point (USP).
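This workshop does not describe the internals of the Retrieval tool, so the following is only a toy illustration of the general RAG idea, not OpenAI's implementation. It splits a document into chunks, scores each chunk by word overlap with the question, and prepends the best chunks to the prompt. Real systems typically use embedding similarity, and the word-overlap score is a stand-in for it. All names here are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lower-case word set; a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def chunk(text: str, size: int = 50) -> list[str]:
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, document: str, k: int = 2) -> str:
    """Augment the question with retrieved context before it goes to the LLM."""
    context = "\n---\n".join(retrieve(question, chunk(document), k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

The model answers from the retrieved chunks rather than from its training data alone. That is why RAG helps with documents published after the model was trained.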

Creating the Assistant:

1. Go to the Playground and log in with your OpenAI account.

2. Go to the Assistants section in the left pane, and click the Create button in the top-right corner.

3. Enter details such as Name, Instructions, and Model, and select Retrieval in the Tools section.

4. In the Files section, click Upload and select the file you want to analyze. I selected a research paper released on 14 November to make sure the model wouldn't have it in its training data.

5. Once the Assistant is created, let's test it.
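You can also perform the Playground steps above through the API. This is a minimal sketch that assumes the v1 beta of the openai Python SDK. In that version, you upload a file with `purpose="assistants"` and attach it to the Assistant through `file_ids`. Later API versions replaced Retrieval with a different file-search tool, so check the current reference before relying on this. The function name, file path, and instructions are placeholders.

```python
def create_retrieval_assistant(client, pdf_path: str, name: str, instructions: str,
                               model: str = "gpt-4-1106-preview"):
    """Upload a document and create an Assistant that can search it.

    Hypothetical helper mirroring the Playground steps: upload the file
    (step 4), then create the Assistant with Retrieval enabled (step 3).
    """
    # Files used by Assistants are uploaded with purpose="assistants"
    with open(pdf_path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="assistants")
    return client.beta.assistants.create(
        name=name,
        instructions=instructions,
        model=model,  # Retrieval needed a 1106 model in the v1 beta
        tools=[{"type": "retrieval"}],
        file_ids=[uploaded.id],
    )
```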

Prompting Time

Prompt 1:

Response:

There is something interesting in the response above: it ends with “[1]”, which, when you hover over it, displays the paper's complete abstract verbatim. The abstract is already the authors' own summary of the paper, so by citing it the Assistant gives you an exact reference summary. That makes it a good yardstick for evaluating the summary the Assistant produced, which in my case I found to be pretty good.
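When you use the same Assistant through the API instead of the Playground, citations like the “[1]” above arrive as annotations on the message text. The text contains a raw marker, and each annotation records that marker together with the cited file. This minimal sketch turns the markers into numbered footnotes. It is modeled on the v1 beta message format (`text.value`, `text.annotations`, and `file_citation` with `file_id` and `quote`). The function name is hypothetical, and the sketch reads `quote` defensively because it may be absent.

```python
def render_citations(text) -> str:
    """Replace citation markers with [n] and append the cited quotes as footnotes.

    `text` is a message content part's `.text` object (v1 beta shape, assumed).
    """
    value = text.value
    footnotes = []
    for index, annotation in enumerate(text.annotations, start=1):
        # Swap the raw marker embedded in the text for a numbered reference
        value = value.replace(annotation.text, f"[{index}]")
        citation = getattr(annotation, "file_citation", None)
        if citation is not None:
            quote = getattr(citation, "quote", None) or "(no quote returned)"
            footnotes.append(f"[{index}] {citation.file_id}: {quote}")
    if footnotes:
        value += "\n\n" + "\n".join(footnotes)
    return value
```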

Prompt 2:

Response

This is the famous “explain like I'm five” construct, in which the model simplifies the text and explains it to you as if you were five.

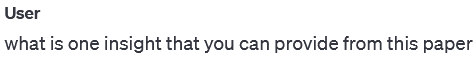

Prompt 3:

Response

Here, I wanted to check whether it could access the internet, and it can't (as expected). Instead, it retrieved the reference papers cited in the research, which seem to be accurate. For requests where internet access is essential, I think agent-like constructs are more promising. I recently wrote an article (Link) about AutoGen, which gives agents the ability to access the internet; that capability would be better suited to this use case.

Prompt 4:

Response

When you have a multitude of research papers to read, this prompt can surface their key insights quickly. You can then use these insights to decide which papers deserve your time and skip the ones that don't meet your specific criteria.

Conclusion

This is an amazing capability, and I can see it being used in a plethora of use cases where we have personal knowledge bases and would like to use LLM capabilities to give structure to the knowledge they contain. It is equally beneficial for organizations (for help articles) and individuals (for journal notes) who want this capability without training an LLM on their data. Training is far more expensive (at least for now) and requires much more technical know-how than this solution.
